How to Develop Intuition for Security Research: Apply the Scientific Method

- Davy Marrero

- Jan 23, 2023

Intuition plays a prominent role in security research. It guides a researcher’s decisions about which system components to prioritize for analysis. What is the source of intuition? Can its source be defined and systematized so that new researchers can learn to develop their intuition and experienced researchers can further refine their own? Applying the Scientific Method to security research can do just that.

Security Research vs. Traditional Software Development

Over the years, I have worked with several engineers while they were transitioning to security research from traditional software development roles. These engineers, accustomed to well-defined tasks, workflows, and milestones, were often challenged by the open-ended nature of security research. Most standard software development methodologies do not directly translate to security research. A vision for what a “minimum viable product” might be and how to get there is not apparent at the start of a project, and some research problems even turn out to be unsolvable. The missing component for many of these new security researchers: intuition.

The Role of Intuition in Security Research

Experienced engineers often rely on intuition, built from experience, to determine tasking and direction. They have seen enough software to know where to prioritize effort. However, what an experienced engineer considers worth investigating might not be apparent to someone new, leaving less experienced engineers feeling disempowered and unsure where to start. Newer security researchers developing their intuition need a framework to guide their decision-making process. Additionally, technical leads working with a team of less experienced engineers need techniques to define tasks and steer a project’s direction. The Scientific Method provides a roadmap for accomplishing these goals.

What is the Scientific Method?

In grade school science classes, students learn about the Scientific Method as a systematic and logical problem-solving approach. The modern definition of the Scientific Method can be traced back to philosopher and psychologist John Dewey’s “How We Think,” where he describes a system of inquiry as being divided into five steps:

- A felt difficulty
- Locating and defining the problem
- The suggestion of a possible solution
- Refining the suggestion
- Testing whether the suggestion solves the problem

This methodology sounds like a process that software developers can relate to!

Today, the Scientific Method is commonly taught as a multistep process:

- Make an observation
- Ask a question
- Form a hypothesis
- Make a prediction based on the hypothesis
- Test the prediction
- Use the results to make a new hypothesis or prediction

Applying the Scientific Method to Security Research

So, how can we apply this process to security research? Typically, experienced engineers already do so—somewhat subconsciously. Presented with a new system or piece of software and an end goal (commonly remote code execution or local privilege escalation), we apply the Scientific Method intuitively. We observe how the software behaves under various conditions. We ask what attack vectors are most likely to lead to potential vulnerabilities. We formulate hypotheses about how we might exploit those vulnerabilities and design experiments to test those hypotheses. Finally, we collect and analyze data to determine the nature and severity of any discovered vulnerabilities. Let’s look at a hypothetical example.

Our task is to conduct a security audit of a web server that runs on a network device. While observing the behavior of the software (reading documentation, visiting various endpoints, examining HTTP headers, etc.), we find an endpoint used to configure the system. We ask the question, what types of vulnerabilities might be present? At this stage, some abstract thinking is necessary.
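Part of that observation step can be scripted. The sketch below uses only the Python standard library to request a list of paths and record each response’s status code plus a few headers, such as `Server`, that often hint at the underlying web server. The `survey` function, the choice of headers, and the paths in the usage note are illustrative assumptions, not details of the audit described here.

```python
import urllib.error
import urllib.request

# Headers worth recording during initial observation (an illustrative choice).
INTERESTING_HEADERS = ("Server", "Content-Type", "WWW-Authenticate")


def survey(base_url: str, paths: list[str], timeout: float = 5.0) -> list[dict]:
    """Request each path and record its status code and selected headers."""
    results = []
    for path in paths:
        url = base_url.rstrip("/") + path
        record = {"path": path, "status": None, "error": None}
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                record["status"] = resp.status
                headers = resp.headers
        except urllib.error.HTTPError as err:
            # 4xx/5xx responses still carry headers worth noting.
            record["status"] = err.code
            headers = err.headers
        except urllib.error.URLError as err:
            # Connection refused, DNS failure, etc.: note the reason and move on.
            record["error"] = str(err.reason)
            headers = {}
        for name in INTERESTING_HEADERS:
            record[name] = headers.get(name)
        results.append(record)
    return results
```

For example, `survey("http://192.0.2.1", ["/", "/login", "/config"])` would survey a device at a documentation-range address; the real address and candidate paths would come from the device’s documentation and from browsing its interface.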

An experienced engineer’s intuition might lead them to hypothesize that a path-traversal or command-injection vulnerability is present. They can make such an inference because they have analyzed enough software to know where particular classes of vulnerabilities tend to manifest. A less experienced engineer might need to gather additional background information before making such mental leaps independently, perhaps by getting familiar with the Common Weakness Enumeration (CWE) and gaining experience through capture-the-flag exercises. Technical mentorship is also key in helping less experienced engineers learn to make these inferences.

Next, we hypothesize that we can gain control of the system through a command-injection vulnerability and predict that such a vulnerability might be present in the device configuration endpoint. We test our prediction using static- and dynamic-analysis tools and techniques. If successful, we can move to the next stage of our audit and develop a proof of concept. If not, we can use what we learned during this analysis phase to revise our test and confirm our results, or we may even go in a completely different direction and look at other software components. Along the way, we take notes that describe our test environment, steps taken, and results.
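One dynamic test of that prediction is to submit shell metacharacters followed by a marker command and check whether the command’s output comes back. The Python sketch below assumes a caller-supplied `send` function (hypothetical) that submits one parameter value to the configuration endpoint and returns the response body. It uses shell arithmetic so that executed output (`ci42ci`) can be told apart from the payload merely being reflected (`ci$((6*7))ci`).

```python
from typing import Callable

# "$((6*7))" becomes "42" only if a shell evaluates the payload, so "ci42ci"
# in a response suggests execution rather than simple reflection of input.
MARKER_COMMAND = "echo ci$((6*7))ci"
EXPECTED_OUTPUT = "ci42ci"

# Common ways to chain or substitute a command in POSIX shells.
INJECTION_TEMPLATES = ("; {}", "| {}", "&& {}", "`{}`", "$({})")


def probe_command_injection(send: Callable[[str], str], benign: str = "test") -> list[str]:
    """Return the payloads whose responses show evidence of shell execution.

    `send` (hypothetical, supplied by the caller) submits one parameter value
    to the endpoint under test and returns the response body as text.
    """
    baseline = send(benign)
    findings = []
    for template in INJECTION_TEMPLATES:
        payload = f"{benign} {template.format(MARKER_COMMAND)}"
        body = send(payload)
        # Require the marker to be absent from the baseline to avoid false hits.
        if EXPECTED_OUTPUT in body and EXPECTED_OUTPUT not in baseline:
            findings.append(payload)
    return findings
```

This oracle only detects injection whose output is echoed back in the response. Blind injection needs a different signal, such as a measurable time delay or an out-of-band callback, and a negative result here does not rule out a vulnerability.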

Improvements at the Team Level

These are loose guidelines that might seem obvious, but they help identify processes we take for granted and enable us to develop them further. The power of applying the Scientific Method to security research is that engineers can break down open-ended research into a series of discrete and well-defined “next actions” akin to methodologies used in traditional software development. Each step in the Scientific Method brings forth a set of next actions that technical leads can assign to individual contributors. Let’s zoom out from our previous example and look at what tasks the Scientific Method defines at the team or organization level.

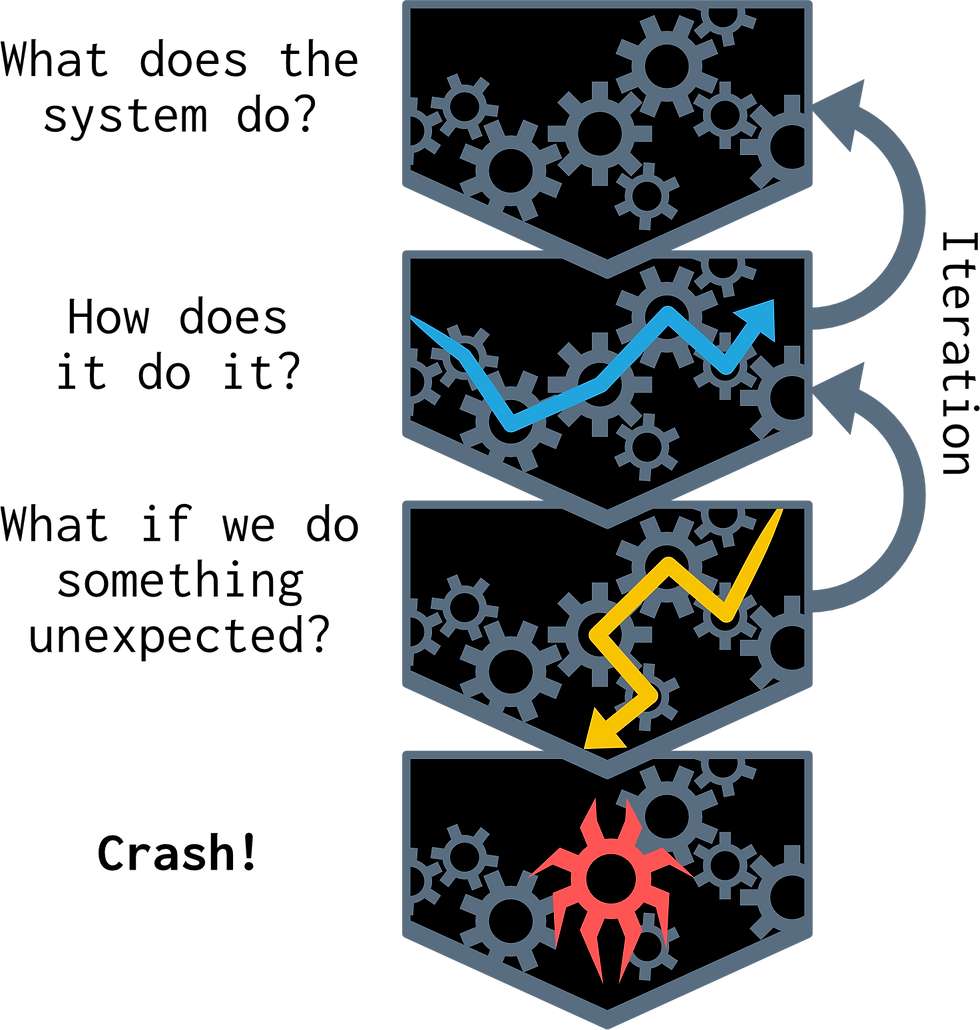

When starting an analysis of a new system, the first set of tasks is to make high-level observations by asking and answering surface-level “what” questions:

- What does the system do at a high level?
- What are its components?
- What prior research exists?

We then go deeper in step two of the Scientific Method by asking “how”:

- How does the system receive input?
- How does it process that input?
- How do we find any missing information needed to answer these questions?

At this stage, we are gathering information—resist the temptation to go too deep into the details. Depending on the subject under test, possible next actions might include the following:

- Extracting firmware and loading it into a disassembler
- Identifying input-parsing functionality in the extracted firmware
- Locating and analyzing any usage of potentially unsafe library functions
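A quick first pass at the last item can happen before any disassembly. In a dynamically linked ELF binary, imported function names are stored as NUL-terminated strings in the dynamic string table, so scanning for printable runs (much like the `strings` utility) can flag candidates. The Python sketch below assumes a short, illustrative watch list; a match only means a name is present, so each hit still needs confirming through cross-references in the disassembler, and stripped or statically linked images may yield nothing.

```python
import re

# An illustrative, deliberately short watch list (an assumption; extend as needed).
UNSAFE_FUNCTIONS = frozenset(
    {"strcpy", "strcat", "sprintf", "vsprintf", "gets", "system", "popen"}
)

# Runs of at least four printable ASCII bytes, similar to `strings`.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{4,}")


def find_unsafe_references(blob: bytes) -> dict[str, int]:
    """Count printable strings in `blob` that exactly match a watched name."""
    counts: dict[str, int] = {}
    for match in PRINTABLE_RUN.finditer(blob):
        name = match.group().decode("ascii")
        if name in UNSAFE_FUNCTIONS:
            counts[name] = counts.get(name, 0) + 1
    return counts
```

Usage on an extracted binary (the filename is hypothetical): `with open("extracted/httpd", "rb") as f: print(find_unsafe_references(f.read()))`.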

Now that we have completed these tasks and gained sufficient program understanding, we form a well-scoped hypothesis, make a prediction based on it, and develop a plan to test that prediction. For example, we might think, “This complex parsing function probably does not properly handle all edge cases when processing untrusted user data. I’ll write a fuzzer to test edge cases and conduct manual analysis through code auditing and live debugging.”
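A basic mutation fuzzer for such a parser can be very small. The Python sketch below takes seed inputs, applies random byte overwrites, insertions, and deletions, and records every input that makes the target raise an exception, which stands in for a crash here. `parse_config` is a hypothetical `key=value` line parser invented for illustration, not code from any real device; for native firmware code, an established coverage-guided fuzzer such as AFL++ or libFuzzer would be the practical choice.

```python
import random
from typing import Callable


def mutate(data: bytes, rng: random.Random, max_mutations: int = 4) -> bytes:
    """Apply a few random byte-level edits: overwrite, insert, or delete."""
    buf = bytearray(data)
    for _ in range(rng.randint(1, max_mutations)):
        roll = rng.random()
        if roll < 0.6 and buf:
            buf[rng.randrange(len(buf))] = rng.randrange(256)  # overwrite
        elif roll < 0.8:
            buf.insert(rng.randrange(len(buf) + 1), rng.randrange(256))  # insert
        elif buf:
            del buf[rng.randrange(len(buf))]  # delete
    return bytes(buf)


def fuzz(target: Callable[[bytes], object], seeds: list[bytes],
         iterations: int = 1000, seed: int = 0) -> list[tuple[bytes, Exception]]:
    """Run mutated seeds through `target`, collecting inputs that raise."""
    rng = random.Random(seed)  # fixed seed so a run can be reproduced exactly
    crashes = []
    for _ in range(iterations):
        candidate = mutate(rng.choice(seeds), rng)
        try:
            target(candidate)
        except Exception as exc:  # an uncaught exception stands in for a crash
            crashes.append((candidate, exc))
    return crashes


# Hypothetical target: a naive "key=value" parser that ignores edge cases
# such as non-ASCII bytes and lines without "=".
def parse_config(data: bytes) -> dict[str, str]:
    config = {}
    for line in data.decode("ascii").splitlines():
        key, value = line.split("=", 1)
        config[key] = value
    return config
```

Calling `fuzz(parse_config, [b"host=router\nport=80\n"])` returns the crashing inputs with their exceptions. Fixing the RNG seed is a small design choice that pays off later: the same run can be repeated exactly when someone needs to validate the results.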

Security research begins to resemble traditional software development as we systematically define next actions throughout this process. Technical leads can use Kanban boards and other project management tools to track progress and define milestones.

Iteration and the Importance of Reproducibility

Test results might support or contradict a hypothesis, but neither outcome is conclusive on its own. If we find a crash, it does not necessarily mean the bug is exploitable or that the vulnerability exists in all configurations; further investigation is necessary. Conversely, if we do not find a crash, there might be a flaw in our test, such as missed guard logic that prevented our fuzzer from reaching a vulnerable code path. These potential inconsistencies are why documentation and reproducibility are as vital to security research as they are to any other scientific discipline.
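A first step in that further investigation is confirming that each crash reproduces and grouping duplicates. The Python sketch below re-runs every crashing input several times and buckets it by exception type plus the function and line where the exception was raised, loosely analogous to bucketing native crashes by stack trace. The `crash_signature` and `triage` names and the three-run default are illustrative assumptions, and the sketch says nothing about exploitability.

```python
import traceback
from collections import defaultdict
from typing import Callable, Optional

Signature = tuple[str, str, int]  # (exception type, function name, line number)


def crash_signature(target: Callable[[bytes], object], data: bytes) -> Optional[Signature]:
    """Run `target` once and describe where it failed, or return None if it did not."""
    try:
        target(data)
    except Exception as exc:
        innermost = traceback.extract_tb(exc.__traceback__)[-1]  # raise site
        return (type(exc).__name__, innermost.name, innermost.lineno)
    return None


def triage(target: Callable[[bytes], object], inputs: list[bytes],
           runs: int = 3) -> tuple[dict[Signature, list[bytes]], list[bytes]]:
    """Bucket inputs that crash the same way on every run.

    Inputs that never crash or crash inconsistently are returned separately,
    since they need a closer look (environment, state, or configuration).
    """
    buckets: dict[Signature, list[bytes]] = defaultdict(list)
    not_reproduced = []
    for data in inputs:
        signatures = {crash_signature(target, data) for _ in range(runs)}
        if len(signatures) == 1 and None not in signatures:
            buckets[signatures.pop()].append(data)
        else:
            not_reproduced.append(data)
    return dict(buckets), not_reproduced
```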

Extremely detailed documentation is not always necessary, but engineers should always take sufficient notes on their progress and capture any tooling, source code, or analysis databases so that another engineer can validate or invalidate the results. These results influence the next iteration of the analysis. This process can continue indefinitely; therefore, it is up to technical leads to regularly perform a cost-benefit analysis to ensure that, given any time or budgetary constraints, the research that engineers perform has a reasonable chance of success.
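Notes do not need to be elaborate, but a consistent structure makes them easier for someone else to check. Below is one possible shape for a per-experiment record, sketched in Python; the field names and the supported/contradicted/inconclusive vocabulary are assumptions for illustration rather than a prescribed format. Hashing the analyzed artifact lets another engineer confirm they are testing the same firmware image or input.

```python
import hashlib
import json
import platform
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

VALID_RESULTS = ("supported", "contradicted", "inconclusive")


def sha256_hex(data: bytes) -> str:
    """Fingerprint an artifact so others can confirm they test the same thing."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class ExperimentRecord:
    hypothesis: str
    prediction: str
    artifact_sha256: str
    steps: list[str]
    result: str
    tooling: dict[str, str] = field(default_factory=dict)
    # Captured automatically; extend with device model, firmware version, etc.
    environment: dict[str, str] = field(default_factory=lambda: {
        "python": platform.python_version(),
        "platform": platform.platform(),
    })
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self) -> None:
        if self.result not in VALID_RESULTS:
            raise ValueError(f"result must be one of {VALID_RESULTS}, got {self.result!r}")

    def to_json(self) -> str:
        """Serialize deterministically (sorted keys) for storage next to the artifacts."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)
```

A contradicted result is recorded the same way as a supported one, because it shapes the next hypothesis just as much.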

A Framework for Developing Intuition

Intuition is an indispensable part of security research; however, intuition is an abstraction over the processes many of us take for granted. The Scientific Method exposes how we think about solving problems. By defining our thought processes, we can refine them and more effectively teach those we mentor. The Scientific Method clarifies a pathway to the end goal: finding vulnerabilities. It provides a roadmap that is usable at the team level and by individual contributors.

By applying the Scientific Method to our work, technical leads can break down open-ended projects, define next actions, and turn them into discrete tasks they can assign to other engineers. Concurrently, individual contributors develop the ability to figure out what to do next without overly relying on experienced engineers for direction. The Scientific Method provides a framework for developing intuition, enhancing collaboration, reducing inconsistencies in research methods, and promoting the reproducibility of findings.
